Real-time feed processing and filtering

This is another lengthy post so I’m writing a brief overview as an introduction.

Introduction

This post is about web syndication feeds (RSS and Atom), technologies for real-time delivery of feeds (PubSubHubBub, RSSCloud) and two opportunities I believe could help make these technologies better and widen their adoption: real-time feed processing/filtering and end-user selection of processing/filtering services. First, I’ll write about my experience with polling-based feed processing services from developing Feed-buster, a feed-enhancing service for use in FriendFeed. Next, I’ll give a short overview of PubSubHubBub (PSHB) and several ways of integrating feed filtering and processing functionalities into the PSHB ecosystem without changing the PSHB protocol. Lastly, I’ll try to argue why there should be a better solution and outline an idea for an extension to the PSHB protocol.

Before I continue, I have to acknowledge that I’ve discussed some of these ideas with Julien Genestoux (the guy behind Superfeedr) and Brett Slatkin (one of the PSHB developers) several months ago. Julien and I even started drafting a proposal for a PSHB extension for supporting filters; however, due to other stuff in our lives, we didn’t really get anywhere.

Polling-based feed processing and filtering – experience from FriendFeed and Feed-buster

FriendFeed is a social-networking web application that aggregates updates from social media sites and blogs that expose an RSS or Atom feed of their content. You give FriendFeed the URI of an RSS/Atom feed and it fetches and displays short snippets of updates as they appear in the feed. In most cases this means that only the title and a short snippet of the description of the new item are shown. For feeds that have special XML tags that list media resources in the update, the images/videos/audio content from the new feed item is also displayed. The problem is that most RSS/Atom feeds don’t have these media XML tags since webapps that create the feeds (e.g. blogging engines) don’t bother defining them. This gave a not-very-user-friendly look to most FriendFeed user pages which were just a long list of update titles and possibly snippets.

So I built Feed-buster, an AppEngine service that inserts these MediaRSS tags into feeds that don’t have them. Since the only thing FriendFeed accepts from users are URIs pointing to feeds – the service must be callable by requesting a single URI with an HTTP GET request and must return an RSS/Atom feed in an HTTP response. Here’s how Feed-buster works:

- A URI to an RSS/Atom feed is supplied as a query parameter of an HTTP GET request to the service. E.g. http://feed-buster.appspot.com/mediaInjection?inputFeedUrl=http://myfeed.com/atom.

- The service fetches the target feed, finds all the image, video and audio URIs and inserts the special XML tags for each one back into the feed.

- The service returns the modified feed as the content of an HTTP response.
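To make the middle step above more concrete, here is a minimal sketch of media injection for an Atom feed. It is not Feed-buster’s actual code: the function name `inject_media`, the regular expression and the choice to handle only `<img>` tags inside HTML content are assumptions made for illustration, and fetching the feed over HTTP is left out.

```python
import re
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
MEDIA_NS = "http://search.yahoo.com/mrss/"  # Media RSS namespace

# Keep readable prefixes when the feed is serialized again.
ET.register_namespace("", ATOM_NS)
ET.register_namespace("media", MEDIA_NS)

# Hypothetical, deliberately simple pattern: only double-quoted <img src="...">.
IMG_SRC = re.compile(r'<img[^>]+src="([^"]+)"', re.IGNORECASE)


def inject_media(atom_xml: str) -> str:
    """Return the feed with a media:content element added for each image found."""
    root = ET.fromstring(atom_xml)
    for entry in root.iter(f"{{{ATOM_NS}}}entry"):
        content = entry.find(f"{{{ATOM_NS}}}content")
        if content is None or not content.text:
            continue
        # Skip URLs that already have a media tag, so the function is idempotent.
        known = {m.get("url") for m in entry.findall(f"{{{MEDIA_NS}}}content")}
        for url in IMG_SRC.findall(content.text):
            if url not in known:
                ET.SubElement(entry, f"{{{MEDIA_NS}}}content",
                              {"url": url, "medium": "image"})
                known.add(url)
    return ET.tostring(root, encoding="unicode")
```

Items are only modified, never removed, which matches the "processing" role described in this post. A real service would also need to handle video and audio, RSS 2.0 feeds and malformed markup.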

To use the service, the user would obtain a URI of an RSS/Atom feed, create the corresponding Feed-buster URI by appending the feed URI to the Feed-buster service URI and pass it to FriendFeed (1). Suppose that the feed is not real-time enabled (i.e. it is not a PSHB or RSSCloud feed). In this case, every 45 minutes FriendFeed (FF) will poll for updates – send an HTTP GET request to the service (2), the service will fetch the original feed (3), process the feed (4) and return an HTTP response with the processed feed (5).

Feed-buster architecture

Feed-buster is a feed processing service: it takes a feed URI as input and returns the feed it points to as output, potentially modifying any subset of feed items (but not removing any). Feed filtering services are similar: they take a feed URI as input and return the feed it points to as output, potentially removing any subset of feed items (but not modifying any). The difference is subtle and both terms could be unified under “feed processing”. Furthermore, this model of feed processing/filtering was nothing new at the time I developed Feed-buster; there were numerous services that filtered/processed feeds based on user preferences. The best example is probably Yahoo! Pipes, which enables anyone to create a filtering/processing pipe and publish it as a service just like Feed-buster.
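The distinction between the two kinds of services can be written down in a few lines. This is only an illustrative sketch: representing a feed item as a dictionary and the names `apply_filter` and `apply_processor` are my assumptions, not part of any existing service.

```python
from typing import Callable, Dict, List

Item = Dict[str, str]  # hypothetical representation of one feed item


def apply_filter(items: List[Item], keep: Callable[[Item], bool]) -> List[Item]:
    """Filtering: may remove items, never modifies the ones it keeps."""
    return [item for item in items if keep(item)]


def apply_processor(items: List[Item], transform: Callable[[Item], Item]) -> List[Item]:
    """Processing: may modify items, never removes any."""
    return [transform(dict(item)) for item in items]
```

For example, `apply_filter(items, lambda i: "hello" in i["title"])` keeps only matching items unchanged, while `apply_processor(items, lambda i: {**i, "title": i["title"].upper()})` returns the same number of items with modified titles.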

Lessons learned:

- Feed-buster is very simple to use and this helped to make it popular (appending a URI to another URI is something everyone can do).

- Feed-buster’s model of use treats filtering/processing services as separate entities in the ecosystem, which lets these services be developed independently of publishers and subscribers. Applications that enable other applications make ecosystems grow.

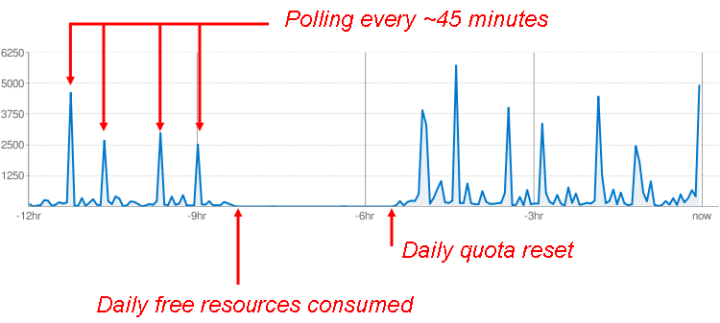

- Polling is bad (1). Because AppEngine applications have a fixed free daily quota for consumed resources, when the number of feeds the service processed increased – the daily quota was exhausted before the end of the day because FF polls the service for each feed every 45 minutes. See the AppEngine resource graph below for a very clear visual explanation :). Of course, I implemented several caching mechanisms in the service and it helped, but not enough.

- Polling is bad (2). Real-time feed delivery arrived in the form of PubSubHubBub and RSSCloud, everyone loved it and wanted everything to be real-time. But Feed-buster feeds weren’t real-time. Even if the original feed supported PSHB, the output feed didn’t (since Feed-buster wasn’t a PSHB publisher or hub). Feed-buster users were not happy.

PubSubHubBub – real-time feed delivery

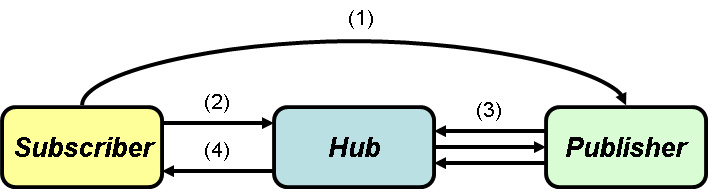

PubSubHubBub is a server-to-server publish-subscribe protocol for federated delivery of feed updates. Without going into a lot of detail, the basic architecture consists of publishers, subscribers and hubs which interact in the following way:

- A feed publisher declares its hub in its Atom or RSS XML file and a subscriber initially fetches the feed to discover the hub.

- The subscriber subscribes to the discovered hub, declaring the feed URI it wishes to subscribe to and a URI at which it wants to receive updates.

- When the publisher next updates the feed, it pings the hub saying that there’s an update and the hub fetches the published feed.

- The hub multicasts the new/changed content out to all registered subscribers.
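The first two steps of the list above (hub discovery and subscription) can be sketched from the subscriber’s side as follows. The parameter names `hub.mode`, `hub.topic`, `hub.callback` and `hub.verify` follow my reading of the PSHB 0.3 draft, so check the spec before relying on them; the function names and example URIs are made up for illustration, and actually POSTing the body to the hub is left out.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

ATOM_NS = "http://www.w3.org/2005/Atom"


def discover_hubs(feed_xml: str) -> list:
    """Return the href of every <atom:link rel="hub"> declared in the feed."""
    root = ET.fromstring(feed_xml)
    return [link.get("href")
            for link in root.iter(f"{{{ATOM_NS}}}link")
            if link.get("rel") == "hub"]


def subscription_body(topic: str, callback: str) -> str:
    """Build the form-encoded body a subscriber would POST to the hub."""
    return urlencode({
        "hub.mode": "subscribe",
        "hub.topic": topic,        # the feed URI being subscribed to
        "hub.callback": callback,  # where the hub should deliver updates
        "hub.verify": "sync",      # assumed verification mode
    })
```

With a feed that declares `<link rel="hub" href="http://hub.example.com/"/>`, `discover_hubs` returns that one URI, and `subscription_body("http://myfeed.com/atom", "http://subscriber.example.com/callback")` gives the request body for it.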

And this gives us real-time feed delivery. But what about real-time filtering and processing? How would a real-time-enabled version of Feed-buster be built? Of course, there are a lot more reasons for doing this, both from business and engineering perspectives.

Real-time feed processing and filtering (take 1)

So, the idea is to extend the PSHB ecosystem with filtering/processing services in some way. First, here are a few ideas for doing this without extending the PSHB protocol:

- Integrating filtering/processing services into publishers. This means that the publisher would expose not only the original feed, but also a feed for each filter/processing service defined by the subscriber. For example, Twitter would have a single feed of all updates for a specific user, plus a feed for updates from that user containing the string “hello”, a feed for updates not containing “world”, a feed for updates written in English, and so on.

- Integrating filtering/processing services into subscribers. This means that the subscriber application would subscribe to feeds and filter/process them on its own. It’s not exactly the same thing but, for example, FriendFeed subscribes to feeds you want it to aggregate and later you can filter the content from within the FriendFeed application. This is called search, as in Twitter, but basically means filter.

- Integrating filtering/processing services into hubs. This means that a hub would not only deliver feed updates, but also process and filter them. This would cause a large number of hubs to be developed, each implementing some filter or processing functionality. If you want to filter a feed – subscribe to a hub that implements that filter. If you want a new filter – build a new hub. However, if an end-user tells a subscriber application to subscribe to a feed with a specific URI, the subscriber will subscribe to a hub specified in the feed, not to the filtering/processing hub (since that hub wasn’t specified in any way). A possible way of enabling end-users to select which hub they want to use is to generate dummy feeds on filtering/processing hubs (e.g. http://www.hubforfiltering.com/http://www.myfeed.com/atom). The contents of this dummy feed are the same as the contents of the original feed, with one simple difference – the hub elements from the original feed are removed and a hub element for the new hub is inserted. This way, when the end-user passes the dummy feed URI to the subscriber application, the application will subscribe to the filtering/processing hub, not the original one. The filtering/processing hub then subscribes to the original hub to receive updates, filters/processes received updates and notifies the subscriber.

- Masking the filtering/processing services as the subscriber which then notifies the real subscriber. I’m not sure this is possible (too lazy to go through the PSHB spec), but in theory, here’s how it would work. The subscriber would obtain a URI pointing to the filtering/processing service, from which the service would know what the real subscriber notification callback is (e.g. http://www.filter.com?subscriber=http://www.realsubscriber.com/callback). The subscriber would subscribe to the hub using the obtained URI. When the publisher publishes an update, the hub would pass the update to the filtering/processing service thinking it was the subscriber. The filtering/processing service would do its thing and pass the update to the real subscriber. It’s unclear though how this would be done by end-users since they’d have to know the subscriber application’s callback URI.
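The dummy-feed trick from the third approach above is easy to sketch for an Atom feed: drop the original hub links and insert one pointing at the filtering/processing hub. The function name `make_dummy_feed` and the example hub URI are hypothetical, and this only rewrites feed-level `<link rel="hub">` elements.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
ET.register_namespace("", ATOM_NS)
LINK = f"{{{ATOM_NS}}}link"


def make_dummy_feed(atom_xml: str, filtering_hub: str) -> str:
    """Copy the feed, replacing its hub declarations with the filtering hub."""
    root = ET.fromstring(atom_xml)
    # findall() returns a list, so removing while looping is safe.
    for link in root.findall(LINK):
        if link.get("rel") == "hub":
            root.remove(link)
    root.insert(0, ET.Element(LINK, {"rel": "hub", "href": filtering_hub}))
    return ET.tostring(root, encoding="unicode")
```

A real service would probably also have to point the feed’s `rel="self"` link at the dummy feed URI, since subscribers may use it as the topic when subscribing; I have left that out here.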

All of these approaches have their pros and cons. Some are not easily or at all end-user definable, some may introduce unnecessary duplication of notifications, some introduce delivery of unwanted notifications, some do not support reuse of existing infrastructure and cause unnecessary duplication of systems, and most do not promote the development of filtering/processing services as a separate part of the ecosystem.

Real-time feed processing and filtering (take 2)

What I believe is needed is an architecture and protocol which supports three things. First – filtering/processing services as a separate part of the ecosystem. Since publishers and subscribers can’t possibly anticipate and implement every possible filter and processing service, this will enable a stream of development activity resulting in services with different functionality, performance and business models. Again, applications enabling other applications help software ecosystems grow.

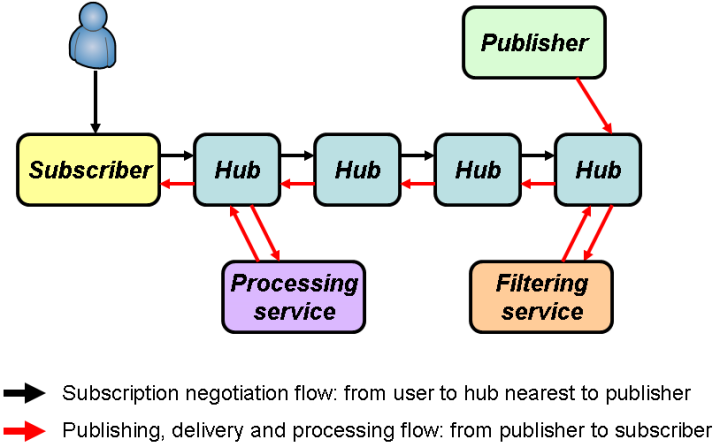

Second – a scalable processing model where hubs invoke filtering/processing services. Regarding architectural decisions on where filtering/processing should be applied, there’s a paper (citation, pdf) from 2000, “Achieving Scalability and Expressiveness in an Internet-scale Event Notification Service”, which states two principles:

Downstream replication: A notification should be routed in one copy as far as possible and should be replicated only downstream, that is, as close as possible to the parties that are interested in it.

Upstream evaluation: Filters are applied and patterns are assembled upstream, that is, as close as possible to the sources of (patterns of) notifications.

In short, these principles state that filtering services should be applied as close to the publisher as possible so that notifications nobody wants don’t waste network resources. However, processing services should be applied as close to the subscriber as possible so that the original update may be transported through the network as a single notification for as long as possible. In cases where there’s a single hub between the publisher and subscriber, the same hub would invoke both processing and filtering services. However, in more complex hub network topologies different hubs would invoke different types of services based on their topological proximity to the subscriber/publisher.
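For the simplest topology, a linear chain of hubs between one publisher and one subscriber, the placement rule can be sketched like this. The function `place_services`, the hub names and the dictionary layout are assumptions for illustration only; branching topologies with many subscribers would need something more elaborate.

```python
from typing import Dict, List


def place_services(hub_chain: List[str],
                   filters: List[str],
                   processors: List[str]) -> Dict[str, Dict[str, List[str]]]:
    """Assign services to hubs ordered from publisher side to subscriber side.

    Filters go to the hub closest to the publisher (upstream evaluation),
    processors to the hub closest to the subscriber (downstream replication).
    """
    if not hub_chain:
        raise ValueError("at least one hub is required")
    placement = {hub: {"filters": [], "processors": []} for hub in hub_chain}
    placement[hub_chain[0]]["filters"] = list(filters)
    placement[hub_chain[-1]]["processors"] = list(processors)
    return placement
```

With a single hub in the chain, the first and last hub are the same, so that hub ends up invoking both kinds of services, as described above.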

Third and last – end-user selection of these services. When third-party filtering/processing services exist – how will they be chosen for a particular subscription? In my opinion, this must be done by end-users and thus there must be an easy way of doing it. The first thing that comes to mind would be to extend the subscription user-interface (UI) exposed to users of subscriber applications. E.g. instead of just showing a textbox for defining the feed the user wants to follow, there could be an “Advanced” menu with textboxes for defining filtering and processing services.

No matter how cool I think this would be as a core part of the PSHB protocol, it’s probably a better idea to do it as an extension (as a first step?). So here’s what the extension would define:

- The subscription part of the protocol additionally enables subscribers to define two lists of URIs when subscribing to a hub, one declaring the list of filtering services and the other the list of processing services that should be applied.

- Hubs are responsible not only for delivery of notifications but also for calling filtering/processing services on those notifications and receiving their responses. This requires an inter-hub subscription negotiation/propagation mechanism that enables the optimal placement of subscription-defined filters and processing services in the delivery flow. In case of a single hub, nothing needs to be done since the hub invokes both types of services. However, in case of two hubs, the hub which first received the subscription would locally subscribe to the second hub stating only the filtering services, while processing services would not be sent upstream. Thus, the hub closer to the subscriber would be responsible for invoking processing services, and the hub closer to the publisher would be responsible for invoking filtering services. This could, I believe, be generalized and formalized for any kind of hub network topology.

- When an update is published, hubs responsible for invoking filtering or processing services must do so before forwarding the notifications downstream. There are several performance-related issues which need to be addressed here. First, the invocation of filtering/processing services should be asynchronous and webhook-based (the hub calls a service passing a URI to which the result of processing should be POSTed). Second, invocation of services on a specific hub for a specific notification should be done in parallel, except if multiple processing services were defined for a single subscription, in which case those processing services must be invoked serially (filters can always be invoked in parallel). Third, there’s a not-so-clear trade-off between a) waiting for all invoked filters to respond and sending a single notification carrying all metadata regarding which filters it satisfied and b) sending multiple notifications containing the same content but different metadata regarding which filters were satisfied (e.g. as each filter responds). Since all filters will not respond at the same time (some will respond sooner, some later and some possibly never) – waiting for all filters invoked in parallel before continuing seems contradictory to the constraint of real-time delivery. On the other hand, creating multiple notifications with the same data will introduce an overhead in the network. Overall, I believe that the overhead will not be substantial since there will not be many duplicates (this can even be regulated as a hub-level parameter, e.g. one notification for services returning in under 2 seconds, one for under 10 seconds, and one for everything else), and timely delivery is basically a necessity.
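The invocation order described in the last item (filters in parallel, processing services serially) can be sketched on a single hub as follows. To keep the example short, services are plain in-process Python callables run on a thread pool instead of asynchronous webhook calls, and every name here (`run_filters`, `run_processors`, `handle_update`, the `satisfied_filters` key) is hypothetical. The sketch takes option a) with a deadline: it waits up to `timeout` seconds and ignores filters that have not responded by then. Dropping an update when no filter is satisfied is also my assumption.

```python
from concurrent.futures import ThreadPoolExecutor, wait


def run_filters(item, filters, timeout):
    """Invoke all filters in parallel; return names of those that passed in time."""
    pool = ThreadPoolExecutor(max_workers=max(1, len(filters)))
    futures = {pool.submit(fn, item): name for name, fn in filters.items()}
    done, _not_done = wait(list(futures), timeout=timeout)
    pool.shutdown(wait=False)  # do not block on filters that are still running
    return {futures[f] for f in done if f.exception() is None and f.result()}


def run_processors(item, processors):
    """Invoke processing services one after another, each on the previous result."""
    for fn in processors:
        item = fn(item)
    return item


def handle_update(item, filters, processors, timeout=2.0):
    """Return the notification to forward downstream, or None to drop it."""
    satisfied = run_filters(item, filters, timeout)
    if filters and not satisfied:
        return None  # assumption: no satisfied filter means nothing is forwarded
    out = run_processors(dict(item), processors)
    out["satisfied_filters"] = sorted(satisfied)  # metadata for the subscriber
    return out
```

Option b), sending one notification per response-time bucket, would replace the single `wait` call with several deadlines and emit a notification after each one.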

Conclusion

Of course, I didn’t consider all aspects of the problem and there are a lot more points of view to be considered, from “is it legal to change content in a feed and in what way?” to “how do we make this extension secure and fault tolerant?” Nevertheless, I believe that the need and expertise are here and that it’s an opportunity to make the real-time web better. What are your ideas for extending real-time content delivery technologies? @izuzak

7 Responses

Ivan,

pubsubhubbub (0.1, anyway) doesn’t chain together in the way you’ve illustrated, because it’s not symmetrical — hubs don’t get subscribed to other hubs (or indeed, subscribe themselves). While you wouldn’t have to change the protocol, you would have to change the idea of what a hub is. But I guess you are setting out to do that anyway.

For processing I can subscribe the remote processing service to the hub, and subscribe myself to the remote processor. Taking into account the verification, it would probably go

1. Me -> Remote: Please give me a token for this hub to post to you

2. Me -> Remote: Please subscribe me to you

3. Me -> Hub: Please subscribe Remote using this token

This requires me and the remote processing service to understand some generalised bits of PSHB, but nothing extra of the hub (I don’t think).

Thanks for the comments, Michael — you’re right on both accounts.

1. Hubs aren’t chained in PSHB currently and doing so would indeed require changing the idea of what the hub is. Nevertheless, I think that if PSHB gets more widely accepted this will eventually happen because it opens up the protocol to new possibilities, both business and engineering ones: networks are always better than islands. Sometimes I even forget that PSHB doesn’t mention hub chaining because of how natural it seems.

2. The subscription flow you describe is, I believe, a simplified case of the one I described as “Integrating filtering/processing services into hubs”. Since you describe a flow in which the subscriber subscribes to a remote service, and that service subscribes to a hub, the service in a way becomes the hub for the subscriber. And even though you wouldn’t need to change the hub, there would have to be some kind of protocol defined between the subscriber and the remote service for this to work. If this protocol isn’t standardized, every remote service could define the protocol differently and it would be chaos for subscriber applications. That’s why in my description of the “Integrating filtering/processing services into hubs” approach I made the remote service a full hub. That way the protocol between the subscriber and the service (which plays the role of the hub for the subscriber) is defined by PSHB itself. And that’s why I think this should be defined in the context of the PSHB protocol.

Justin,

I scanned this article pretty quickly and I might have missed something. But I wonder if something more like what is done with Domain Name Service (DNS) might be a simple modification to things like ATOM and RSS feeds. Specifically, a DNS record includes a Time-To-Live (TTL) value, which is an estimate of how long the DNS records just delivered should be considered current. A TTL value on a feed could be an estimate, created by the feed publisher, of how frequently the feed is updated. That information could be used to at least minimize the need for polling. Could be handled as an extension to ATOM/RSS, but yes, if the publishers don’t adopt it you aren’t any further along. So, what if the feed consumer/aggregator creates its own estimate of a TTL per feed? That would be easy to compute.

Anyway, just a shallow brain reaction on my part.

Thanks!

— Bob

hi Robert, thanks for commenting! using a TTL-like mechanism is a cool idea. i’m guessing you’re focused only on feed delivery, not the processing and filtering features which my post was focused on? here are some of my thoughts on TTL:

– there already exists a TTL-like mechanism for feeds, and in fact – for the whole WWW! the caching features built into HTTP enable this mechanism using various HTTP headers (see: http://www.mnot.net/cache_docs/). in essence, for any HTTP resource (including feeds which are just that) the publisher can set the “TTL” which specifies how long the client may consider the resource current.

– the nice thing with using TTL in DNS is that DNS records don’t update often or not as often as feeds (right?). the problem with using a TTL-like mechanism with feeds, as you noticed, may be that the feed publisher doesn’t know when the next update will happen and thus cannot set the TTL accordingly. as you say, an estimate can be made based on the history of the feed. that may work for feeds that don’t update often and have a regular update cycle. however, what if the feed has a highly irregular update cycle? you could develop dynamic TTL mechanisms that change based on complex predictions based on history, but that’s still guessing. you’d have to poll (often) just in case. and even worse, consider what happens if the feed is updated very often and is very popular. the TTL would be set very low and you’d end up fetching the resource continuously, like all the other gazillion clients, causing scalability issues for the server (basically, the TTL would be useless). all in all, i think a TTL-like mechanism that estimates the needed update frequency would be a cool feature for clients which are restricted to pull-based feed delivery and for feeds that update rarely and regularly. it definitely would improve a static periodical polling strategy like the one used by FriendFeed (poll every 40-45 mins). for clients that are not restricted to polling, a push-based solution (PSHB) seems like a much cleaner and more scalable solution.

best,

Ivan aka Justin :)

Sorry, I mean Ivan! Where did the name Justin come from? :)